When Hype Hits Reality: The Spectacular Misfire of a Peter Thiel-Backed AI Drone Startup

In the high-stakes world of tech startups, venture capital, and cutting-edge innovation, few names carry as much weight as Peter Thiel. His firm, Founders Fund, has a legendary track record of backing disruptive companies that reshape industries. So, when they invest in a defense-tech startup promising to revolutionize warfare with autonomous, AI-powered drones, the world takes notice. But what happens when the Silicon Valley mantra of “move fast and break things” collides with the unforgiving reality of military-grade expectations? You get the story of Stark, a cautionary tale of hype, ambition, and a spectacular failure to launch.

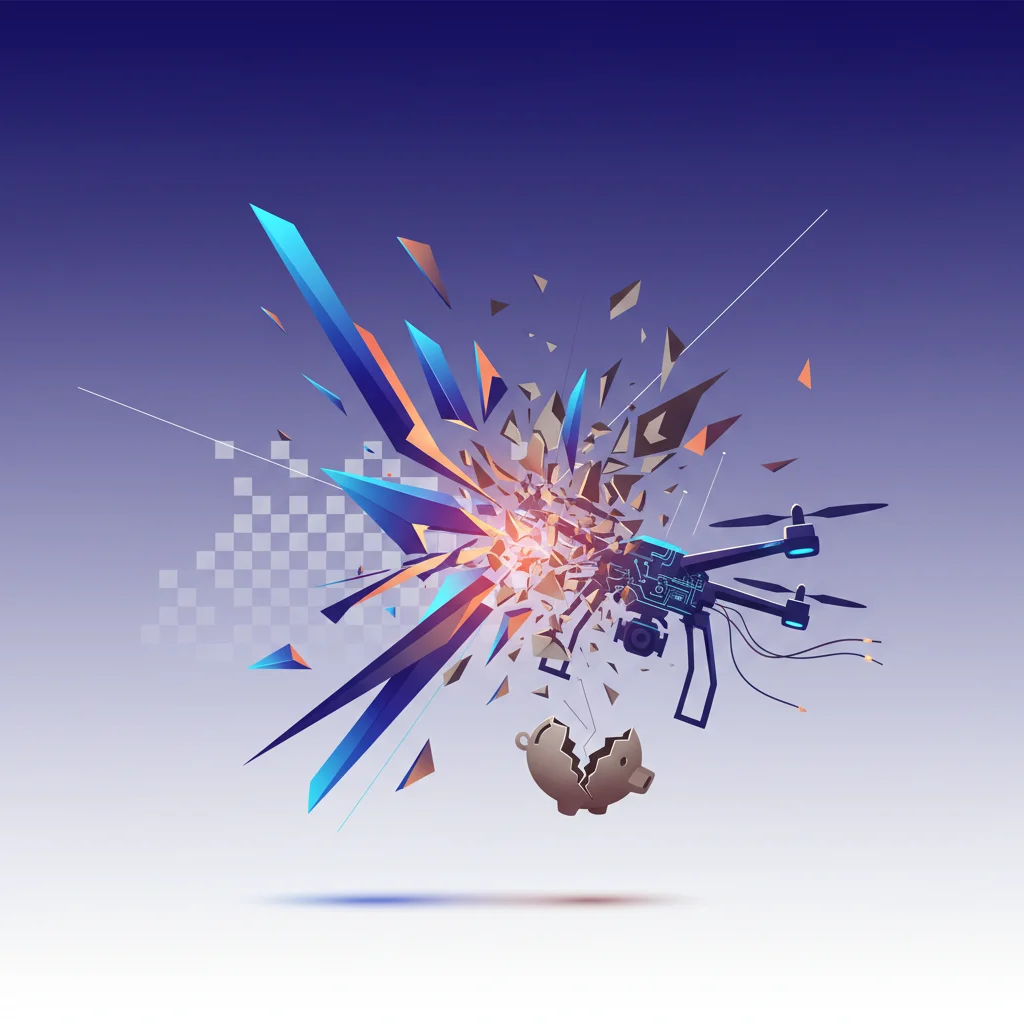

The Berlin-based startup, armed with significant funding and a bold vision, recently underwent critical trials with both the British and German armed forces. The goal was to demonstrate its “intelligent, networked, and expendable” drones. The outcome? According to a bombshell report from the Financial Times, Stark’s drones failed to hit a single target in four separate attempts. It was a complete and utter misfire, sending shockwaves through the burgeoning defense-tech sector and raising critical questions about the readiness of AI for the battlefield.

This isn’t just another story of a failed product demo. It’s a crucial case study for entrepreneurs, developers, and investors alike, revealing the deep chasm between a compelling pitch deck and a product that works under pressure. Let’s deconstruct what happened, why it matters, and the hard-learned lessons it offers for the future of artificial intelligence and autonomous systems.

The Seductive Promise of AI-Powered Warfare

To understand the magnitude of this failure, we first need to appreciate the promise. Stark wasn’t just building another remote-controlled drone. They were operating in the realm of loitering munitions—often called “kamikaze drones”—a class of weapons designed to autonomously hunt for and destroy targets. This is where the power of modern AI and machine learning comes into play.

The vision is compelling: a swarm of low-cost, intelligent drones that can be launched from a distance, enter a conflict zone, and use onboard processing to identify, track, and engage enemy targets without constant human intervention. This represents a paradigm shift in military capability, promising to:

- Increase Soldier Safety: Keeping human operators far from the front lines.

- Enhance Precision: Using sophisticated algorithms to minimize collateral damage.

- Overwhelm Defenses: Deploying swarms that are difficult for traditional air defense systems to counter.

- Automate the Kill Chain: Drastically reducing the time from target identification to engagement.

This is the future that venture capitalists and military leaders are betting on. The integration of advanced software, running on powerful edge-computing hardware and connected via resilient cloud-like networks, is seen as the next frontier of defense innovation. Stark, with its high-profile backing, was positioned as a frontrunner in this race. But their performance in the field tells a very different story.

From Pitch Deck to Proving Ground: A Tale of Four Misses

The true test of any technology, especially in the defense sector, isn’t in a controlled lab environment—it’s on the proving ground. Stark’s drones were put through their paces in two separate exercises, one with the British Army and another with Germany’s armed forces. The results were stark indeed.

Here’s a clear breakdown of the reported performance during these crucial military trials:

| Military Force | Exercise Location | Number of Attempts | Successful Hits | Outcome |
|---|---|---|---|---|
| British Army | United Kingdom | 2 | 0 | Total Failure |
| German Bundeswehr | Germany | 2 | 0 | Total Failure |
| Total | – | 4 | 0 | 0% Success Rate |

A zero-percent success rate in a live-fire demonstration is more than just a setback; it’s a catastrophic failure that obliterates credibility. The exact technical reasons haven’t been made public, so any diagnosis is speculation, but systems this complex have well-known failure points: software bugs in the guidance system, machine learning models that misidentify or lose the target, susceptibility to electronic warfare such as jamming, or simple hardware malfunctions under the stress of flight. As one person familiar with the trials bluntly told the FT, the technology was “some way from being battlefield-ready.”

The Bigger Picture: Sobering Lessons for Tech and Defense Startups

While it’s easy to focus on this single company’s failure, the real value lies in the broader lessons for the entire ecosystem of startups, developers, and investors working at the intersection of technology and defense.

Lesson 1: The Culture Clash Between Silicon Valley and the Military

The tech industry thrives on agility, iteration, and launching a “minimum viable product” (MVP). This “fail fast, learn faster” approach is fantastic for developing web apps, but it’s dangerously incompatible with military applications. In defense, reliability, security, and predictability are paramount. A weapon system cannot be 99% reliable; it must approach 100% under the most adverse conditions imaginable. This requires a fundamentally different approach to development, testing, and quality assurance—one that many startups, accustomed to rapid-fire release cycles, are ill-equipped to handle.
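To see why "99% reliable" falls short, consider a rough back-of-the-envelope sketch. It assumes, purely for illustration, that a mission depends on a chain of independent stages (launch, navigation, target recognition, terminal guidance, and so on) and that each stage works 99% of the time. The function name `chain_reliability` and the stage counts are hypothetical and are not drawn from Stark's system or from the reported trials.

```python
def chain_reliability(per_stage: float, stages: int) -> float:
    """Probability that every stage succeeds, assuming stages fail independently.

    Illustrative model only: real systems have correlated failures,
    so this is a simplification, not a reliability analysis.
    """
    if not 0.0 <= per_stage <= 1.0:
        raise ValueError("per_stage must be a probability between 0 and 1")
    if stages < 0:
        raise ValueError("stages must be non-negative")
    return per_stage ** stages


if __name__ == "__main__":
    # Hypothetical stage counts; each stage is assumed 99% reliable.
    for stages in (1, 10, 50, 100):
        overall = chain_reliability(0.99, stages)
        print(f"{stages:>3} stages at 99% each -> {overall:.1%} overall")
```

Under these assumptions, a chain of 50 stages that are each 99% reliable completes successfully only about 60% of the time, which is why per-component reliability has to sit far closer to 100% than a typical MVP mindset would tolerate.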

Lesson 2: The Perilous “Valley of Death”

In defense procurement, there’s a notorious gap known as the “valley of death.” This is the chasm between developing a successful prototype and securing a large-scale, long-term production contract. Many promising companies die in this valley, running out of private funding before they can navigate the labyrinthine bureaucracy of government acquisition. Stark’s public failure during trials is a vivid example of a company stumbling badly within this valley. It demonstrates that even with elite backing, a failure to meet the military’s stringent performance standards can be a death knell. In a statement, Founders Fund said it encourages “ambitious R&D timelines,” but this episode shows the risk of that ambition outstripping execution.

Lesson 3: The Immense Burden of AI Ethics and Cybersecurity

When you’re building a system that automates the decision to use lethal force, the ethical stakes are astronomical. An error in a commercial recommendation algorithm suggests the wrong movie; an error in a lethal autonomous weapon system could lead to tragic and irreversible consequences. This places an immense burden on developers to ensure their AI models are robust, unbiased, and explainable. Furthermore, the cybersecurity implications are terrifying. A weapon system that relies on complex software and network connectivity is a prime target for adversaries. Ensuring that these systems are hardened against hacking isn’t just a feature—it’s a fundamental requirement for national security.

Is the Future of Autonomous Defense Grounded?

Does Stark’s spectacular crash mean the dream of AI-powered defense is dead? Absolutely not. But it does serve as a much-needed dose of reality. The hype cycle for artificial intelligence has been in overdrive, and this incident is a powerful reminder that progress is not always a smooth, upward curve. True, sustainable innovation in this sector won’t come from flashy demos and bold promises alone.

It will come from a hybrid approach that marries the agility and ingenuity of the startup world with the discipline, rigor, and operational expertise of the defense establishment. It requires patient capital that understands the long, arduous path from concept to deployment. And most importantly, it requires engineers and developers who have a profound respect for the complexity and responsibility of what they are building.

The integration of AI, automation, and advanced software will undoubtedly continue to shape the future of defense. But the path forward must be paved with exhaustive testing, transparent validation, and a healthy skepticism of anyone who claims to have solved the challenges of warfare with a clever algorithm alone. Stark’s story is not the end of the chapter, but a crucial, cautionary footnote in the ongoing narrative of technology’s role in our world.
